COA_Spring2013_HWK1

Match the statement with the correct value from the table.

1.1.1. Computer used to run large problems and usually accessed via a network: 3) Servers. Size and network connectivity lead me to believe that this is a dedicated computer that is capable of handling large problems and is not logged into directly like a desktop PC, but is mainly accessed via a network.

1.1.2: 10^15 or 2^50 bytes: 7) Petabyte: a quadrillion bytes is AKA a petabyte, and is 1000 terabytes, a million gigabytes, a billion megabytes or a trillion kilobytes (9.766×10^11 KiB (kibibytes)). Going the other way, the next units are exabytes, zettabytes and yottabytes, each a further factor of 1000 when scaled to base 10.
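As a quick check of the unit arithmetic above, here is a minimal Python sketch. The constant and function names are my own and purely illustrative; it only compares the base-10 and base-2 readings of "peta" and reproduces the kibibyte figure.

```python
# Base-10 (SI) and base-2 (binary) readings of "one petabyte".
PETABYTE_DECIMAL = 10**15   # 1 PB
PEBIBYTE_BINARY = 2**50     # 1 PiB

def bytes_to_kib(n_bytes):
    """Convert a byte count to kibibytes (1 KiB = 1024 bytes)."""
    return n_bytes / 1024

print(PETABYTE_DECIMAL // 10**12)          # terabytes in a petabyte
print(bytes_to_kib(PETABYTE_DECIMAL))      # kibibytes in a petabyte
print(PEBIBYTE_BINARY / PETABYTE_DECIMAL)  # how much larger 2^50 is than 10^15
```

The last line shows that the binary value is roughly 12.6% larger than the decimal one, which is why the two are only approximately interchangeable.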

1.1.3: A class of computers composed of hundreds to thousands of processors and terabytes of memory and having the highest performance and cost: 5) Supercomputers: A defining feature of supercomputers is stripped-down, simplified instruction sets that enable massively efficient and fast computation, large size memory, and massive numbers of processors. Originating from Seymour Cray’s initial CDC designs circa 1970s, of only a few processors, today’s supercomputers can have tens of thousands of processors. The current top-dog is China’s Tianhe-2 that is capable of 33.86 petaFLOPS.

1.1.4 Today’s science fiction application that probably will be available in the near future: 1) Virtual worlds: Virtual worlds are an application that creates a virtual reality using digital simulation. As we witness advancements in wearable technology, such as biofeedback devices eg FitBit, Fuelband, etc; humans as an interface e.g. smart pills and electronic tattoos that can be used for bio-authentication (http://threatpost.com/former-darpa-head-proposes-pills-and-tattoos-to-replace-passwords) or tracking; and augmented reality such as Google Glass, the next big leap will probably meld the digital world much closer into the current physical “reality”.

1.1.5 A kind of memory called random access memory: 12) RAM. The definition is in the question. “Random access” means that any location in memory can be read or written directly, in roughly the same time regardless of its address, unlike sequential media such as tape where data must be reached in order. This presents the benefit of fast access times.

1.1.6. Part of a computer called central processor unit: 13) CPU. Another answer in the question, the CPU is, effectively, the brain of a computer. Within this brain, just as in humans where there are specialized regions that perform different functions, e.g. response inhibition, fight-or-flight, logic and reasoning, vision, etc., there are specialized regions within the computer’s ‘brain’. A key part of the CPU is the Arithmetic and Logic Unit, where all the magic that differentiates a computer from a calculator happens, that is, breaking complex actions down into simple operations and returning output fast.

1.1.7. Thousands of processors forming a large cluster: 8. Data Centers. A data center comprises a large number of processors grouped into clusters. The key words are redundancy (both power and data) and environmental control. Modern data centers are typically islands that have backup generators and alternative power supplies, as well as state-of-the-art cooling and fire suppression equipment.

1.1.8. Microprocessors containing several processors in the same chip: 10) Multicore processors. A core is another name for a central processing unit. A multicore processor has multiple cores, or multiple processing units that can run in parallel, i.e. can run multiple instructions at the same time. A solitary core, by contrast, can only run one instruction at a given time, even though each takes such a small time frame that execution appears instantaneous and the core therefore appears to do many things in parallel. These multiple cores can either be mounted on a single integrated circuit die, or on multiple dies in a single chip package. At the cutting edge is the deca-core (10-core) Intel Xeon E7-8870 (http://ark.intel.com/products/53580).

1.1.9. Desktop computer without a screen or keyboard usually accessed via a network: 4) Low-end servers. Unlike supercomputers, low-end servers utilize clusters of “off-the-shelf” processors (a la over the counter medication) to try and rival the performance of their high-end counterparts that are a lot more expensive.

1.1.10. A computer used to run one predetermined application or collection of software: 9. Embedded computer. Usually a smaller dedicated computer system within a larger system, that usually has real-time constraints. It needs to perform one (or very few) functions quickly and efficiently, unlike a desktop computer that can perform millions of operations with a not-as-hard deadline. Design can be optimized for size and speed due to lack of other factors to worry about, such as sharing resources. Examples range from watches, to traffic lights and factory controller equipment, to larger systems such as MRI machines and avionics (aircraft electronic systems).

1.1.11. Special language used to describe hardware components: 11) VHDL. Actually, VHSIC HDL, or Very High Speed Integrated Circuit Hardware Description Language: It is a hardware description language used in electronic design automation. It describes digital and mixed-signal systems. An HDL (hardware description language) is a specialized language that enables formal description of an electronic circuit, allowing automated analysis, simulation and testing of the circuit, and compilation into a lower-level specification. Unlike programming languages like C, an HDL explicitly includes the notion of time. IEEE Standard 1076 defines VHDL, from the original brainchild of the US D.O.D. that borrowed heavily from the Ada programming language, to its modern iteration as a building block of I.C. design.

1.1.12. Personal computer delivering good performance to single users at low cost: 2) Desktop computers. A desktop computer, or personal computer (PC), is what most people typically associate with the word “computer”. It consists of a monitor, keyboard and mouse for user IO, and the tower that houses optical and hard drives as well as the processor units that enable arithmetic and logic. Floppy disks are antiquated, as are the drives that were associated with them. There is also typically a microphone port and an audio-out port that enable communication and media playing.

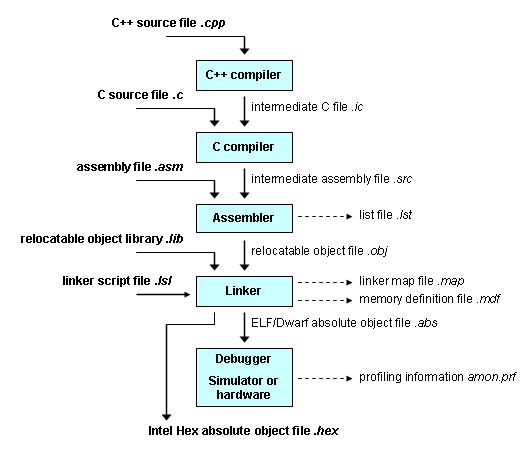

1.1.13. Program that translates statements in high-level language to assembly language: 15) Compiler. It translates (human-readable textual) high-level source code in a programming language into a lower-level language such as assembly, object code or machine code. There are cross-compilers (run on one platform but generate code for a different operating system or processor), decompilers (translate to a higher-level language), language/source-to-source translators or language converters (translate between high-level languages), and language rewriters (translate expressions without a change of language).

1.1.14. Program that translates symbolic instructions to binary instructions: 21. Assembler. An assembler translates assembly instructions into machine language instructions that consist of operation codes, aka opcodes, and resolves lexical identifiers/labels for memory locations. This action assembles or creates object code, and saves a lot of time and effort. There are 2 types: one-pass and multi-pass assemblers (the latter build a table of symbols and their values on the 1st pass, and then generate code on subsequent passes). One-pass assemblers were more commonplace on older computers, where rewinding and rereading physical tape, or rereading cards, was costly, so more than one pass would adversely affect speed. Contemporary assemblers don’t have this issue, and benefit from multiple passes because references to labels defined later in the program can be resolved without errors.
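To make the two-pass idea concrete, here is a minimal Python sketch of a toy assembler. Everything about the machine is hypothetical: the mnemonics, the opcode numbers in `OPCODES`, and the 16-bit word layout (opcode in the high byte, operand in the low byte) are invented for illustration and do not correspond to any real instruction set.

```python
# Hypothetical opcode table for a made-up machine (not a real ISA).
OPCODES = {"LOAD": 0x1, "ADD": 0x2, "JMP": 0x3, "HALT": 0xF}

def assemble(lines):
    """Assemble toy source lines into a list of 16-bit words."""
    # Pass 1: walk the source once, recording the address of every label.
    symbols = {}
    instructions = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        if line.endswith(":"):
            # A label names the address of the next instruction.
            symbols[line[:-1]] = len(instructions)
        else:
            instructions.append(line.split())

    # Pass 2: generate code, replacing label operands with their addresses.
    code = []
    for parts in instructions:
        mnemonic = parts[0]
        operand = parts[1] if len(parts) > 1 else "0"
        value = symbols[operand] if operand in symbols else int(operand)
        code.append((OPCODES[mnemonic] << 8) | value)
    return code, symbols

program = [
    "start:",
    "LOAD 7",
    "JMP end",   # forward reference: 'end' is defined further down
    "ADD 1",
    "end:",
    "HALT",
]
code, symbols = assemble(program)
print(symbols)
print([hex(word) for word in code])
```

The `JMP end` line is the interesting case: when it is read, `end` has not been seen yet, which is exactly the situation the symbol table from the first pass resolves.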

1.1.15. High-level language for business data processing: 25) Cobol. An acronym for Grace Hopper’s COmmon Business-Oriented Language, COBOL is a simple, verbose language that is targeted at corporate/governmental business, finance and administrative systems. It has the benefits of being straightforward and highly portable, but is crippled by lack of structure and by verbosity. It was developed in the Cold War during the US-USSR Arms Race, the equivalent of the Address programming language used in Soviet ballistic missiles. After being modernized because it was susceptible to the Y2K problem, it is having an object-oriented extension library incorporated into it.

1.1.16. Binary language that the processor can understand: 19) Machine language. It is a set of instructions to be executed by a central processing unit and involves actions such as jumps, branches, loads, logical shifts, and load/store word operations. It is the lowest-level representation of programs, and is processor class-based. An instruction is a set of bits that corresponds to a particular command to a machine: this specificity of patterns to commands is what causes a distinction between different processors.

1.1.17 Commands that the processors understand: 17) Instruction. Instructions can either be of similar or variable lengths. Most instructions have one or more opcode fields: opcodes (‘op’ in MIPS) specify the basic instruction (e.g. jump, branch, logical shift, etc.), the actual instruction that this corresponds to (e.g. add, compare, etc.), as well as operand type, offset, index and constant value fields.

where op = opcode, rs = first register source operand, rt = second register source operand, rd = register destination operand (that gets the result of the operation), shamt = shift amount (the number of bits to shift by), and funct = function code (selects the specific variant of the operation in the op field). The diagram above is of the R-type (register type) format, while the following diagram illustrates the I-type, or immediate type, format.

R-type formats are used for add and sub (subtract) instructions, while I-type formats are delegated to add immediate, load word and store word functions. Immediacy refers to coding the value within the instruction itself as opposed to values in implicitly- or explicitly-stored registers. The distinct set of values set in the first field, i.e. the ‘op’ field, distinguish an instruction as R-type or I-type.
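The field breakdown above can be sketched in Python. The bit widths used here (6/5/5/5/5/6 for R-type, 6/5/5/16 for I-type) are the standard 32-bit MIPS layout rather than something stated in this answer, and the function names are my own. The two sample words are `add $t0, $s1, $s2` and `addi $t0, $s1, 5`.

```python
def decode_r_type(word):
    """Split a 32-bit MIPS R-type instruction word into its six fields."""
    return {
        "op":    (word >> 26) & 0x3F,  # 6 bits: opcode
        "rs":    (word >> 21) & 0x1F,  # 5 bits: first source register
        "rt":    (word >> 16) & 0x1F,  # 5 bits: second source register
        "rd":    (word >> 11) & 0x1F,  # 5 bits: destination register
        "shamt": (word >> 6) & 0x1F,   # 5 bits: shift amount
        "funct": word & 0x3F,          # 6 bits: function code
    }

def decode_i_type(word):
    """Split a 32-bit MIPS I-type instruction word into its four fields."""
    return {
        "op":        (word >> 26) & 0x3F,  # 6 bits: opcode
        "rs":        (word >> 21) & 0x1F,  # 5 bits: source register
        "rt":        (word >> 16) & 0x1F,  # 5 bits: target register
        "immediate": word & 0xFFFF,        # 16 bits: constant in the instruction
    }

ADD_WORD = 0x02324020   # add  $t0, $s1, $s2
ADDI_WORD = 0x22280005  # addi $t0, $s1, 5

r_fields = decode_r_type(ADD_WORD)
i_fields = decode_i_type(ADDI_WORD)
print(r_fields)
print(i_fields)
```

In the R-type word the `op` field is 0 and `funct` selects the add operation, whereas in the I-type word the constant 5 sits in the instruction itself, which is what "immediate" refers to.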

1.1.18. High-level language for scientific computation: 26) Fortran. Developed by IBM in the ’50s, the Mathematical FORmula TRANslating System was developed for scientific and engineering purposes. Today, it is a popular high-performance computing language, used for benchmarking and ranking supercomputers. It is the modus operandi in computationally intensive areas such as numerical weather prediction, finite element analysis and computational science. It has adapted well while still maintaining its base functionality, with significant milestones in 1990 (modular & generic programming addition), 2003 (object-oriented addition), and 2008 (concurrent programming). The first Fortran (or rather FORTRAN, since it was the pre-1990 switch from all-caps nomenclature) compiler was produced in 1957, and was an optimizing compiler, meaning it minimized or maximized specific attributes of a program, e.g. minimizing time, power or memory.

1.1.19. Symbolic representation of machine instructions: 18. Assembly language. Assembly is a low-level language that is converted into machine-executable object code by the assembler described above. A mnemonic represents each low-level machine operation or opcode. The flow is illustrated in the diagrams above: the programmer compiles source code using the compiler, which converts it to assembly code, which is subsequently assembled into object code that is then executed after being linked. A linker or link editor takes multiple object files and combines them into a single executable program.

1.1.20. Interface between user’s program and hardware providing a variety of services and supervision functions: 23) System software. System software manages and integrates a computer’s capabilities but does not itself perform tasks that directly benefit the user. System software controls computer hardware, and is the layer on which application software runs. Examples of system software are operating systems, the BIOS (basic input/output system, manages data flow between IO devices and the OS), the bootloader (loads the OS into main memory or RAM), the assembler and device drivers (convert general IO instructions into messages that a device can understand and implement).

1.1.21. Software/ programs developed by the users: 24) Application software. As opposed to system software, application software enables a computer to perform tasks that will directly benefit the user. A good example is any typical application or program, e.g Solitaire, Portable Python, OpenOffice.org Writer or Wireshark.

1.1.22. Binary digit (value 0 or 1): 16) Bit. Just like we can use our fingers (digits) to count, and use a set of numerical representations of quantity (digits), a computer also processes digital input and output as a Binary digIT. A nibble, also spelled nybble (a la a small bite), is 4 bits and a byte is 8 bits. By combining 0’s and 1’s in arbitrarily agreed sequences, we can talk the computer’s language and get things done.

“There are 10 types of people in the world: Those who understand binary, and those who don’t.”
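A small Python sketch of the bit, nibble and byte relationship, and of the joke above. The sample byte value and variable names are arbitrary choices for illustration.

```python
byte_value = 0b10110110          # one byte: 8 bits

high_nibble = byte_value >> 4    # upper 4 bits
low_nibble = byte_value & 0x0F   # lower 4 bits

print(format(byte_value, "08b"))   # the 8 individual bits
print(format(high_nibble, "04b"), format(low_nibble, "04b"))

# The punchline: the string "10" read as binary is the number two.
two = int("10", 2)
print(two)
```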

1.1.23. Software layer between the application software and the hardware that includes the operating system and the compilers: 14) Operating system.

1.1.24 High-level language used to write application and system software: 20) C.

C is a general purpose, imperative (procedural) programming language developed at AT&T Bell Labs in the early 70s by Dennis Ritchie. Its strength is structured programming and efficient machine instruction mapping. Many modern languages have borrowed from C, to put it lightly. It requires minimal run-time support and allows low-level memory access. It is thus named as it was developed from B, which in turn was derived from BCPL, the Basic Combined Programming Language (first brace {} programming language: clean, consistent, and simplified; it started a major trend of splitting the compiler into a front end that parses source code into O-code for a virtual machine and a back end that translates the O-code for the target machine).

1.1.25: Portable language composed of words and algebraic expressions that must be translated into assembly language before run in a computer: 22) High-level language. A high-level language is a highly-abstracted, human-readable programming language. The higher the abstraction from actual machine code, the higher the level of the language. A high-level language can be classified as either interpreted, compiled or translated. Interpreted languages are read and executed without compilation, e.g. ASP, ECMAScript (JavaScript & JScript), Octave, Mathematica, MATLAB, Perl, PHP, Python and Ruby. A compiled language has to be transformed into an executable state, either by machine code generation or by intermediate representation and execution by virtual machines. Examples include Ada, C, COBOL, Delphi, Fortran, Haskell, Pascal, PL/I and Visual Basic. Translated languages are translated into other high-level languages that have native code compilers, i.e. the source is translated into another high-level language that is at a lower level, or less abstracted, than the originating language. C is a common target language for these types of HLLs.

1.1.26. 10^12 or 2^40 bytes: 6) Terabyte.

_________________________________________………………………..________________________________________

Exercise 1.2

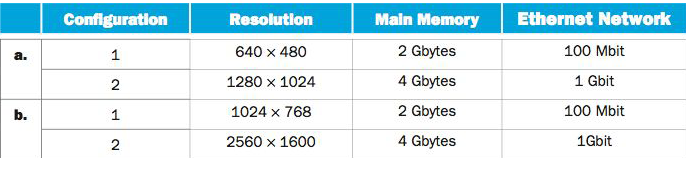

Consider the different configurations.

1.2.1. For a color display using 8 bits for each of the primary colors (RGB) per pixel, what should be the minimum size in bytes of the frame buffer to store a frame?

The frame buffer is the video memory that is used to hold the video image displayed on the screen, and depends on the resolution of the screen and the color depth per pixel. Each pixel is displayed using a combination of varying intensities of 3 different colors, RGB*. When all set to the highest level, it results in white, and when all are set to zero, black results, similar to painting on a physical easel. Bit depth, or color depth, defines the amount of a pixel’s information that can be stored and specified. Higher values, or more bits, equals finer color detail. However, with an increase in bit depth, there is a corresponding increase in memory footprint needed to store this extra information, as well as more data for the graphics card to process that reduces the maximum refresh rate possible.

| Color Depth | Number of Displayed Colors | Bytes of Storage Per Pixel | Common Name for Color Depth |
|---|---|---|---|
| 4-Bit | 16 | 0.5 | Standard VGA |
| 8-Bit | 256 | 1.0 | 256-Color Mode |
| 16-Bit | 65,536 | 2.0 | High Color |
| 24-Bit | 16,777,216 | 3.0 | True Color |

The table** shows how, as the number of colors displayed and the quality increase, there is an increase in pixel storage bytes, from 0.5 bytes for a bit depth of 4 to 3.0 bytes for a 24-bit color depth, a six-fold increase. Using the 8-bit row as a launching pad, it states a value of 256 for the number of displayed colors. It does this by having a palette containing 256 colors, each with its own 3-byte color definition. Assigning a pixel a “color number” results in a look-up of the matching color in the palette. 256-color is the standard for most computing: while poor quality, it is more compatible than the “truer-color” but more memory-intensive alternatives.

The formula to calculate video memory storage size at a given resolution and bit depth is:

Memory (MB or MByte) = (X_resolution * Y_resolution * bits_per_pixel) / (8 * 1,048,576).

The numerator is the product of the display resolution values, e.g. 640×480, and the bit depth. The denominator is 8 bits (that make a byte) multiplied by 2^20 or 1,048,576 to convert bytes to megabytes (strictly mebibytes, since this is base 2, not base 10). With 8 bits for each of R, G and B, the bit depth is 24 bits, or 3 bytes per pixel, so the buffer size in bytes is simply X_resolution * Y_resolution * 3.

Therefore,

configuration a1 ==> 1 frame = 640 pixels wide by 480 pixels high

total pixels = area = 640*480 = 307,200 (pixel area)

307,200 * 3 = 921,600 bytes, or 921,600 / 1,048,576 = 0.87890625 Mbytes (base 2), just to store one frame.

config a2 ==> 1280*1024 = 1,310,720 pixels * 3 = 3,932,160 bytes, or 3.75 Mbytes (base 2), just to store one frame.

config b1 ==> 1024*768 = 786,432 pixels * 3 = 2,359,296 bytes, or 2.25 Mbytes (base 2), just to store one frame.

config b2 ==> 2560*1600 = 4,096,000 pixels * 3 = 12,288,000 bytes, or 11.71875 Mbytes (base 2), just to store one frame.
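The frame buffer arithmetic can be checked with a short Python sketch. It assumes 24 bits per pixel, as the question states (8 bits for each of R, G and B); the dictionary of resolutions uses the four configurations worked above, and the names `CONFIGS` and `frame_buffer_bytes` are my own.

```python
# Resolutions for the four configurations (width, height) in pixels.
CONFIGS = {
    "a1": (640, 480),
    "a2": (1280, 1024),
    "b1": (1024, 768),
    "b2": (2560, 1600),
}

def frame_buffer_bytes(width, height, bits_per_pixel=24):
    """Minimum bytes needed to hold one frame at the given bit depth."""
    return width * height * bits_per_pixel // 8

for name, (width, height) in CONFIGS.items():
    size = frame_buffer_bytes(width, height)
    print(f"{name}: {size:,} bytes = {size / 2**20:.5f} MiB")
```

Passing a different `bits_per_pixel` (for example 8 for 256-color mode) shows how strongly the bit depth drives the buffer size.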

* Pixel data from PCGuide.com ( http://pcguide.com/ref/video/modesColor-c.html )

** table from PCGuide.com ( http://pcguide.com/ref/video/modesBuffer-c.html )

1.2.2. How many frames could it store, assuming the memory contains no other information?

After converting the memory values per configuration to bytes (2 Gbytes = 2*10^9 in base 10 or 2^31 ≈ 2.147*10^9 in base 2, and 4 Gbytes = 4*10^9 in base 10 or 2^32 ≈ 4.295*10^9 in base 2), to calculate the number of frames stored in pristine main memory, we divide memory_available / memory_per_frame, where memory_per_frame is width * height * 3 bytes for 24-bit color.

(Final frame values have been rounded down since incomplete frames would be clipped or not count as a full functional frame.)

config a1 ==> 640*480*3 = 921,600 bytes per frame

(2*10^9:base 10) / 921,600 = 2170.14 or 2170 frames

(2^31:base 2) / 921,600 = 2330.17 or 2330 frames

config a2 ==> 1280*1024*3 = 3,932,160 bytes per frame

(4*10^9:base 10) / 3,932,160 = 1017.25 or 1017 frames

(2^32:base 2) / 3,932,160 = 1092.27 or 1092 frames

config b1 ==> 1024*768*3 = 2,359,296 bytes per frame

(2*10^9:base 10) / 2,359,296 = 847.71 or 847 frames

(2^31:base 2) / 2,359,296 = 910.22 or 910 frames

config b2 ==> 2560*1600*3 = 12,288,000 bytes per frame

(4*10^9:base 10) / 12,288,000 = 325.52 or 325 frames

(2^32:base 2) / 12,288,000 = 349.53 or 349 frames
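A minimal Python sketch of the frame count, assuming 24-bit color (3 bytes per pixel, per question 1.2.1) and memory that holds nothing but frames. The function name is illustrative; integer division does the rounding down.

```python
def frames_that_fit(memory_bytes, width, height, bits_per_pixel=24):
    """Number of whole frames that fit in memory (partial frames dropped)."""
    bytes_per_frame = width * height * bits_per_pixel // 8
    return memory_bytes // bytes_per_frame

# 2 Gbytes and 4 Gbytes, read as base-10 and as base-2 quantities.
GB2_DECIMAL, GB2_BINARY = 2 * 10**9, 2**31
GB4_DECIMAL, GB4_BINARY = 4 * 10**9, 2**32

print("a1:", frames_that_fit(GB2_DECIMAL, 640, 480),
      frames_that_fit(GB2_BINARY, 640, 480))
print("a2:", frames_that_fit(GB4_DECIMAL, 1280, 1024),
      frames_that_fit(GB4_BINARY, 1280, 1024))
print("b1:", frames_that_fit(GB2_DECIMAL, 1024, 768),
      frames_that_fit(GB2_BINARY, 1024, 768))
print("b2:", frames_that_fit(GB4_DECIMAL, 2560, 1600),
      frames_that_fit(GB4_BINARY, 2560, 1600))
```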

1.2.3. If a 256 Kbytes file is sent through the Ethernet connection, how long would it take?

We first need to ensure similar units, so we convert from megabits and gigabits to megabytes and gigabytes. 1 byte is 8 bits, 1 bit is 0.125 bytes, and therefore 1 gigabit is 0.125 gigabytes. 100 megabits is (100 megabits*0.125) or 12.5 megabytes. Further matching the units up, 1 gigabyte is 1,000,000 kilobytes, so 0.125 GB = 125,000 kilobytes. 1 megabyte is 1000 kilobytes, so 12.5 MB = 12,500 KB.

Noteworthy: Ethernet rates are commonly expressed in datagram or frame size per second.

a1 ==> If the pipe is 100 Mbits per second, or 12,500 KB per second, how long would it take to send a 256 KB file through? Time is the file size divided by the transfer rate:

256 kilobytes / 12,500 kilobytes per second = 0.02048 seconds, or 20.48 ms.

a2 ==> 256 kilobytes / 125,000 kilobytes per second = 0.002048 seconds, or 2.048 ms.

b1 ==> 256 kilobytes / 12,500 kilobytes per second = 0.02048 seconds, or 20.48 ms.

b2 ==> 256 kilobytes / 125,000 kilobytes per second = 0.002048 seconds, or 2.048 ms.
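The transfer time is the file size divided by the link rate. A minimal Python sketch follows; it assumes base-10 units (1 Kbyte = 1000 bytes, 1 Mbit = 10^6 bits) and ignores protocol overhead, and the function and constant names are my own.

```python
def transfer_seconds(file_bytes, link_bits_per_second):
    """Ideal time to push a file through a link, ignoring overhead."""
    return file_bytes * 8 / link_bits_per_second

FILE_BYTES = 256 * 1000      # 256 Kbytes, base 10
FAST_ETHERNET = 100 * 10**6  # 100 Mbit/s (configurations a1 and b1)
GIGABIT = 10**9              # 1 Gbit/s   (configurations a2 and b2)

slow = transfer_seconds(FILE_BYTES, FAST_ETHERNET)
fast = transfer_seconds(FILE_BYTES, GIGABIT)
print(f"100 Mbit/s: {slow * 1000:.3f} ms")
print(f"1 Gbit/s:   {fast * 1000:.3f} ms")
```

If a Kbyte is read as 1024 bytes instead, the file is 262,144 bytes and both times grow by about 2.4%.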

Use the access times, for every type of memory, contained in the table to answer the following questions.

Cache memory is a small, reserved section of high-speed memory, typically SRAM* (static RAM). Programs typically access the data or instructions held here frequently, and keeping them readily accessible ensures extremely fast access and fetch times. Today, there are typically 3 caches: L1, L2, and L3.

L1 is the Level 1 or primary or internal or system cache. It is built into the processor and is the fastest and most expensive. It stores the most critical data and instructions and is the processor’s first point of contact when executing instructions. L2 is the Level 2 or secondary or external cache. It was originally located on the motherboard in older computers but is currently placed on the processor chip. While it is on the same processor chip and uses the same die as the CPU, it is still not considered part of the CPU core. (A die is a small block of semiconducting material on which a given functional circuit is fabricated; a single wafer of electronic-grade silicon, or EGS, is cut up into dies that each contain one copy of the functional circuit.) What used to be called the L2 cache before the L2 cache was moved to the processor is now correctly known as L3 cache; it is located on the motherboard and can refer to a shared resource between cores.

DRAM is Dynamic RAM*. It was invented and patented by Robert Dennard in 1968, and released commercially by Intel in 1970. It stores each bit in a cell containing a capacitor and a transistor, which needs a refresh current every few milliseconds so that the capacitor keeps its charge and holds the data. It is volatile: any data contained in this type of memory is lost upon power loss.

A capacitor**, or a condenser, is a passive, 2-terminal electrical component that stores energy electrostatically in an electric field. (Passivity can be either thermodynamic [consumes but doesn’t produce electricity] or incremental [incapable of power gain/does not amplify signals]; electrostatics deals with stationary or slow-moving electric charge with no acceleration, unlike magnetism or electrodynamics.) The basic principle is at least two electrical conductors separated by a dielectric (insulator). When there is a potential difference across the conductors, an electric field develops across the dielectric, causing one plate to develop a positive charge and the other a negative charge. Energy is stored in the resulting electrostatic field. An ideal capacitor is characterized by a single constant value known as capacitance, which increases as the space between the conductors shrinks and as the size of the conductors (plates) increases.

Electric potential is defined as the work done in moving a unit positive charge from infinity, where the potential is considered to be zero, to that point. Objects can carry an electric charge, i.e. a surplus or deficit of electrons. Electric fields exert force on charged objects. If an object has a positive charge, the force will be in the direction of the electric field. The electric potential refers to the field, and not the particle (object). This can either refer to a dynamic field at a specific time, or a static/time-invariant field. Electrostatics*** involves buildup of charge on a surface due to contact with other surfaces, much like the interaction between a hand and thin plastic wrap when opening an Amazon package, as agitated electrons are transferred.

In DRAM, capacitors store information in binary form****, i.e. an “ON” charge represents a binary 1, while “OFF” represents a binary 0. (A good analogy, no pun intended, is a bucket that one can fill with a hose. When the bucket is empty (the equivalent of no charge), this can represent a binary 0. As you turn the tap on, and water starts to fill the bucket, the state changes from empty to full (or ~charged with electrons), equivalent to a binary 1. As we examine how computers speak in binary, making the abstraction from physics and electricity to digital signals that can use logic to perform certain functions is not too far a leap.)

A transistor is not quite passive, but is considered locally active. Since a capacitor is passive and can neither amplify a signal nor produce electronic signals or electrical power, this omnipresent device made of semiconductor material acts as the conduit. Transistors can either be used for switched-mode power supplies in electronics or as the building blocks of transistor logic. A transistor has at least 3 terminals for connection to an external circuit; voltage or current applied to one terminal pair changes the current through another pair. Thousands of transistors can be combined in a single chip in a process known as VLSI (very large scale integration), a practice started in the 1970s that, in conjunction with complex semiconductors, led to great breakthroughs such as microprocessors. A transistor’s usefulness stems from its ability to amplify a small signal into a much larger and controllable signal at a different pair of terminals. In addition to acting as a signal amplifier, it can also be used as an electrically controlled switch, turning current on and off. There are two types: bipolar transistors, which have 3 terminals [a base terminal that is the current input point, and a collector and an emitter terminal, where varying current exits], and field-effect transistors, which have 3 terminals with voltage at the gate controlling current between the source and the drain.

As base voltage increases, emitter and collector currents both rise exponentially, and this amplification aspect is the gain. Collector voltage drops due to reduced resistance from collector to emitter. If voltage difference were near or were zero, collector current would only be limited by load resistance and supply voltage. This phenomenon is known as saturation because current flows freely from collector to emitter. When saturated, a switch is “on”; input voltage can be modified to result in a zero output, or effectively turn the switch completely “off”.

Ohm’s Law states, “The current through a conductor between 2 points is directly proportional to the potential difference across the two points”. Voltage is always expressed in terms of two points.

Flash storage is electronic, non-volatile storage that can be electronically programmed, erased and re-programmed. It developed from EEPROM (electrically erasable programmable read-only memory) and comes in two types: NAND, which is read and written in blocks or pages that are generally smaller than the entire device, and NOR, which allows single bytes (machine words) to be written independently. NAND is primarily used in memory cards, USB flash drives, and SSDs, while NOR is typically used to hold machine code that is executed directly.

Each memory cell is similar to a metal-oxide semiconductor field effect transistor, or MOSFET, that is used to amplify or switch signals. The difference is that the transistor in this case has an extra gate. On top is a control gate (CG); in addition, there is a floating gate below it that is completely insulated by an oxide layer. Due to this insulation, any electrons trapped inside it will not be discharged any time soon. When charged, the floating gate screens or partially cancels the electric field from the control gate. To read a cell, a voltage intermediate between the possible threshold voltages is applied to the CG, and the current flowing through the MOSFET channel is sensed. Whether the channel is conducting or insulating at that voltage gives a binary code, reproducing the stored data. Reading and writing are done using tunnel injection and tunnel release, which involve transmission of charge carriers.

Magnetic storage is the most commonly used form of non-volatile storage today. It includes hard disks(HDDs), floppy disks, magnetic recording tapes, and magnetic stripes (e.g. on credit cards). There are four types: analog, digital, magneto-optical and domain propagation.

Analog recording is done by using the writing head in applying current proportional to a specific signal to a demagnetized tape. This achieves a magnetization distribution along the tape, that enables reproduction of the original signal. Commonly used magnetic particles are iron oxide or chromium oxide.

This has been gradually replaced by digital recording, which only needs the two stable states +Ms and -Ms in the hysteresis loop. Hysteresis is the dependence of a system on both its current and past environment, which arises because the system can be in more than one internal state. Loops can occur either because of alternating input/output or as a result of dynamic lag between input and output.

Magneto-optical recording reads and writes optically, i.e. the magnetic medium is heated locally by a laser, as opposed to a current.

Domain propagation memory, aka bubble memory, is a type in which domain wall motion is controlled. A magnetic domain is a region within a magnetic material which has uniform magnetization: individual magnetic atomic moments are aligned with each other and point in the same direction. These domains are what are also termed bubbles, alluding to their cylindrical shape. Domain walls are the interfaces that separate magnetic domains. Each bubble holds one bit of data. Bubbles are read by moving them to the nearer edge of the material, and written at the far edge, resulting in a delay line memory system. This idea was abandoned with the onset of more popular alternatives, but has seen a revitalizing surge in the form of MIT researchers who demonstrated that bubble logic at the nano level is 3 ms faster than present hard drives, as well as IBM researching improvements in memory using domain wall memory (DWM).

The chart below, from TechOn.com (http://nkbp.jp/1e31pMj), illustrates the intricacies of memory:

1.2.4. Find how long it takes to read a file from a DRAM if it takes 2 microseconds from the cache memory.

Cache (5 ns access time) takes 2 µs to read the file.

DRAM access time is given in ns, therefore convert all units to ns.

2 µs = 2000 ns.

Cache memory takes 0.1, or one tenth, of the time that DRAM takes to access storage. Therefore, if cache memory takes 2000 ns to read a file, DRAM would take 10 times longer, or 2000*10 = 20,000 ns (20 µs).

1.2.5. Find how long it takes to read a file from a disk if it takes 2 microseconds from the cache memory.

Disk takes a whopping 5 milliseconds, or 5,000,000 ns. This means that cache (5 ns) accesses memory a million times faster. If a read operation costs cache 2000 ns, it would take disk 1,000,000 times longer, or 2000*1,000,000 or 2,000,000,000 ns.

1.2.6 Find how long it takes to read a file from flash memory if it takes 2 microseconds from the cache memory.

Flash takes 5 µs, or 5000 ns. This means that cache (5 ns) accesses memory a thousand times faster. If a read operation takes cache 2000 ns, it would take flash 1000 times longer, or 2000*1000 or 2,000,000 ns.
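Questions 1.2.4 to 1.2.6 all scale the cache read time by the ratio of access times. A minimal Python sketch of that reasoning follows; the access times are the ones used in the answers above (DRAM taken as ten times the 5 ns cache figure), and the names `ACCESS_NS` and `read_time_ns` are my own.

```python
# Access times in nanoseconds, as used in the answers above.
ACCESS_NS = {
    "cache": 5,
    "dram": 50,           # ten times slower than cache
    "flash": 5_000,       # 5 microseconds
    "disk": 5_000_000,    # 5 milliseconds
}

def read_time_ns(device, cache_read_ns=2_000):
    """Scale a known cache read time by the device-to-cache access ratio."""
    return cache_read_ns * ACCESS_NS[device] // ACCESS_NS["cache"]

for device in ("dram", "flash", "disk"):
    print(f"{device}: {read_time_ns(device):,} ns")
```

In friendlier units the three results are 20 µs for DRAM, 2 ms for flash and 2 s for disk.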

References:

*ComputerHope.com (http://www.computerhope.com/jargon/c/cache.htm)

**Capacitors ( https://en.wikipedia.org/wiki/Capacitor )

***Electrostatics ( https://en.wikipedia.org/wiki/Electrostatic )

**** Binary equivalents of +ve/-ve charge ( http://download.micron.com/pdf/education/k12_pdf_lessons/capacitor.pdf )