AI: Intelligent Agents (HWK_II)

EXERCISES

2.1 Suppose that the performance measure is concerned with just the first T time steps of the environment and ignores everything thereafter. Show that a rational agent’s action may depend not just on the state of the environment but also on the time step it has reached.

In the simulated vacuum agent’s world, each time step is an action. It is a step forward in linear time, not necessarily in physical distance. However, each step has an associated cost, be it power/energy consumption, noise, or wear and tear. An efficient and rational agent would maximize performance while keeping all of these costs as low as possible. The opportunity cost of doing nothing, and saving on all the other costs, is that the floor stays dirty and the agent fails. The opportunity cost of attempting to clean the floor is the costs or penalties already mentioned: power, noise, wear and tear, etc. By taking only as many time steps as are needed to solve the dirt problem and no more, this agent would not only perform rationally but also score well on a performance measure that checks for efficiency and self-preservation. If an agent cleans one square, cleans another, and then cleans the first square again, it wastes time and power and adds wear and tear. If instead it can track cleaned and dirty squares and work out a way of visiting each square only once, it reaches the best case: the fewest time steps, which translates into savings in time, noise, power and depreciation. If the ideal route, visiting each square once, takes 200 steps, then needing 201 steps means there was a “glitch in the matrix”. Unnecessary steps bring the agent closer both to any lifetime its programmers might have set and to the physical lifetime limit imposed by regular wear and tear, so they should be treated as undesirable and avoided. Finally, because the performance measure ignores everything after step T, an action whose payoff would only arrive after step T is worth nothing. Moving toward a distant dirty square with too few steps left to reach and clean it is pure cost, while the same move made early on is worthwhile, so the rational action in a given state can depend on the time step the agent has reached.
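To make the dependence on the time step concrete, here is a minimal Python sketch. It assumes the two-square world from 2.2 (squares A and B, actions Left, Right and Suck), scores one point per clean square after each step, and, as an added assumption borrowed from 2.2(b), charges one point per move. The helper `best` is a hypothetical name; it plans by exhaustive lookahead over only the steps that remain before T.

```python
from functools import lru_cache

ACTIONS = ("Suck", "Left", "Right")  # ties go to the first action listed
MOVE_COST = 1  # assumption: each attempted move costs one point, as in 2.2(b)


def step(state, action):
    """Deterministic two-square world. state = (location, dirty_a, dirty_b)."""
    loc, dirty_a, dirty_b = state
    cost = 0
    if action == "Suck":
        if loc == "A":
            dirty_a = False
        else:
            dirty_b = False
    elif action == "Left":
        loc, cost = "A", MOVE_COST  # moving off the edge leaves the agent in place
    elif action == "Right":
        loc, cost = "B", MOVE_COST
    clean_squares = (not dirty_a) + (not dirty_b)
    return (loc, dirty_a, dirty_b), clean_squares - cost


@lru_cache(maxsize=None)
def best(state, steps_left):
    """(value, action) maximizing the score over the remaining steps only;
    anything after step T is ignored, as the performance measure does."""
    if steps_left == 0:
        return 0, None
    options = []
    for action in ACTIONS:
        next_state, reward = step(state, action)
        future_value, _ = best(next_state, steps_left - 1)
        options.append((reward + future_value, action))
    return max(options, key=lambda option: option[0])  # first maximum wins ties


# Same state (agent in A, A clean, B dirty), different numbers of steps left.
state = ("A", False, True)
for steps_left in (1, 2, 3, 10):
    print(steps_left, best(state, steps_left))
```

With one step left, moving to B is a net loss, and with two it only breaks even (the tie goes to staying), so the planner stays put; with three or more steps left it moves right. The state is identical in every case; only the time step differs.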

2.2 Let us examine the rationality of various vacuum-cleaner agent functions.

a. Show that the simple vacuum-cleaner agent function described in Figure 2.3 is indeed rational under the assumptions listed on page 38.

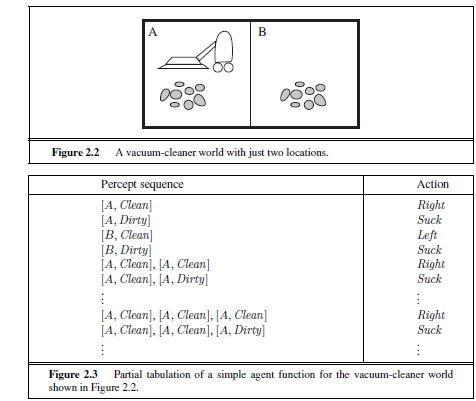

Figure 2.3:

Assumptions:

• The performance measure awards one point for each clean square at each time step, over a “lifetime” of 1000 time steps.

• The “geography” of the environment is known a priori but the dirt distribution and the initial location of the agent are not. Clean squares stay clean and sucking cleans the current square. The Left and Right actions move the agent left and right except when this would take the agent outside the environment, in which case the agent remains where it is.

• The only available actions are Left, Right, and Suck.

• The agent correctly perceives its location and whether that location contains dirt.

Since there are only two tiles in this case, the logic is greatly simplified. If the current tile is dirty, clean it (Suck). If it is clean, move to the other tile, which is only one direction over. Under these assumptions this is rational: movement costs nothing, so shuttling back and forth between clean tiles does not lower the score, and no agent that perceives only its own tile could get a dirty tile cleaned any sooner. Ideally, there would be some way of preventing or slowing down traversal between tiles once both are clean, but for simplicity’s sake, this is rational enough.
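One way to check this numerically is the following minimal Python sketch. It assumes the two-square world exactly as listed above, counts the clean squares after each action, and compares the Figure 2.3 behaviour (written out as a hypothetical `REFLEX_TABLE`) with an omniscient planner that knows where the dirt is. All helper names are made up for illustration. If the reflex agent scores as well as the omniscient planner from every one of the eight starting configurations, then no agent can have a higher expected score, whatever the dirt distribution.

```python
from itertools import product

LIFETIME = 1000  # time steps, from the assumptions above
ACTIONS = ("Left", "Right", "Suck")

# The agent function of Figure 2.3 as a table: (location, status) -> action.
REFLEX_TABLE = {
    ("A", "Clean"): "Right",
    ("A", "Dirty"): "Suck",
    ("B", "Clean"): "Left",
    ("B", "Dirty"): "Suck",
}


def step(state, action):
    """state = (location, dirty_a, dirty_b); movement is free under these assumptions."""
    loc, dirty_a, dirty_b = state
    if action == "Suck":
        if loc == "A":
            dirty_a = False
        else:
            dirty_b = False
    elif action == "Left":
        loc = "A"  # if already in A, the agent stays where it is
    elif action == "Right":
        loc = "B"
    return (loc, dirty_a, dirty_b)


def clean_count(state):
    _, dirty_a, dirty_b = state
    return (not dirty_a) + (not dirty_b)


def reflex_score(state):
    """One point per clean square after each of the LIFETIME steps."""
    total = 0
    for _ in range(LIFETIME):
        loc, dirty_a, dirty_b = state
        dirty_here = dirty_a if loc == "A" else dirty_b
        state = step(state, REFLEX_TABLE[(loc, "Dirty" if dirty_here else "Clean")])
        total += clean_count(state)
    return total


def optimal_values():
    """Best achievable score from every state for a planner that sees all dirt,
    computed by backward induction over the lifetime."""
    states = list(product("AB", (False, True), (False, True)))
    value = {s: 0 for s in states}  # no steps left
    for _ in range(LIFETIME):
        value = {
            s: max(clean_count(step(s, a)) + value[step(s, a)] for a in ACTIONS)
            for s in states
        }
    return value


optimal = optimal_values()
for start in optimal:
    print(start, reflex_score(start), optimal[start])
```

The brute-force backward induction is only feasible because this world has eight states; it is a check on the claim, not a practical agent design.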

b. Describe a rational agent function for the case in which each movement costs one point. Does the corresponding agent program require internal state?

Yes. In this environment, where every movement carries a penalty, it is critical to maintain internal state recording which squares have already been visited and found clean, to avoid revisiting them or, even worse, the agent losing track of where it is in space, which would mean all previous progress has been lost. Once the agent knows both squares are clean, the rational action is to stop moving. By maintaining state and keeping track of visited tiles, an agent can build a map of the part of the world it has visited so far, and learn about the rest of the unknown world as it moves through it.

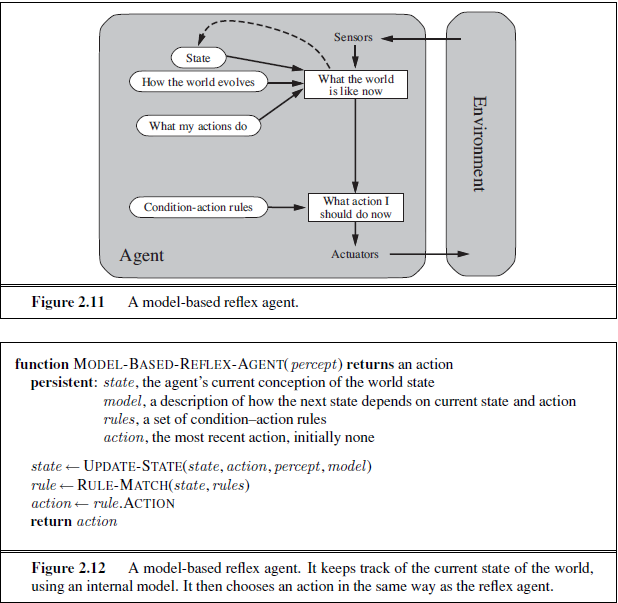

The figure above shows a model-based reflex agent: it is stateful, keeps updating its view (its “opinion” or interpretation) of the world it lives in, and uses condition-action rules to choose actions that score well on the performance measure.
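Here is a minimal Python sketch of such a stateful agent, under two stated assumptions: each move costs one point, and there is a do-nothing `NoOp` action for the agent to use once it knows both squares are clean (the original action set has only Left, Right and Suck). The class and function names are hypothetical. Starting in A with both squares clean, the stateful agent pays for one move and then stops, while the stateless Figure 2.3 agent keeps paying to shuttle back and forth.

```python
MOVE_COST = 1  # each movement costs one point, as in 2.2(b)


class ModelBasedVacuumAgent:
    """Keeps internal state: the set of squares it knows to be clean."""

    def __init__(self):
        self.known_clean = set()

    def __call__(self, percept):
        location, status = percept
        self.known_clean.add(location)  # clean now, or clean right after Suck
        if status == "Dirty":
            return "Suck"
        if self.known_clean == {"A", "B"}:
            return "NoOp"  # assumed do-nothing action: moving again only costs points
        return "Right" if location == "A" else "Left"


def reflex_agent(percept):
    """The stateless agent of Figure 2.3, for comparison."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def run(agent, location, dirt, steps=1000):
    """Score = clean squares after each step, minus MOVE_COST per move."""
    dirt = dict(dirt)  # copy so the caller's dict is untouched
    score = 0
    for _ in range(steps):
        action = agent((location, "Dirty" if dirt[location] else "Clean"))
        if action == "Suck":
            dirt[location] = False
        elif action in ("Left", "Right"):
            location = "A" if action == "Left" else "B"
            score -= MOVE_COST
        score += sum(not dirty for dirty in dirt.values())
    return score


both_clean = {"A": False, "B": False}
print(run(ModelBasedVacuumAgent(), "A", both_clean))  # stops after one move
print(run(reflex_agent, "A", both_clean))  # keeps paying to shuttle back and forth
```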

c. Discuss possible agent designs for the cases in which clean squares can become dirty and the geography of the environment is unknown. Does it make sense for the agent to learn from its experience in these cases? If so, what should it learn? If not, why not?

2.3 For each of the following assertions, say whether it is true or false and support your answer with examples or counterexamples where appropriate.

a. An agent that senses only partial information about the state cannot be perfectly rational.

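False. Rationality is judged relative to the percept sequence the agent actually receives: a rational agent maximizes its expected performance given what it has perceived, not given information it could never sense. Depending on the scope of its task, an agent might not need to sense information en masse; detecting a small, targeted set of state identifiers might suffice for its purposes. The vacuum agent of Figure 2.3 is an example: it perceives only its own square, yet it is rational under the assumptions of 2.2. Similarly, if an agent is stationary, sensing its own position in space would be unnecessary; detecting other objects moving around it might be enough for it to keep functioning and head toward a goal state without sensing the full state of the environment.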

b. There exist task environments in which no pure reflex agent can behave rationally.

True. As an environment increases in chaos and unpredictability, even a smart agent, including a smart human being, cannot always behave rationally. A good example is Dr Malcolm, the chaos theory mathematician in Michael Crichton’s Jurassic Park, who states that as a system gets more complex, its outcomes get more random and harder to predict. If a vacuum agent exists in a controlled environment where dirt is introduced at random, it is easy to monitor and measure its performance. If the same vacuum agent is then placed in a real-world environment, with pets, toddlers, kicked-off shoes and the like, it needs a learning and upgrading mechanism that can map and remember how a running child differs from a coffee table leg. As the environment grows, there are exponentially more choices, outcomes and even shifting goal states (e.g. shifting from delivering a formal report to running away from a T-Rex). A pure reflex agent has no memory at all: it acts on the current percept alone, so in any partially observable environment where the right action depends on history, it fails. For instance, in the two-square vacuum world where each move costs a point, a reflex agent that perceives [A, Clean] cannot tell whether B is already clean, so it must either always move (wasting points) or never move (possibly leaving B dirty).
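The vacuum world of 2.2(b), where each move costs a point, can be checked exhaustively. This Python sketch assumes two squares, one point per clean square after each step, a 100-step lifetime, a do-nothing `NoOp` action, and all eight starting configurations equally likely; every name in it is hypothetical. It enumerates all 4^4 = 256 pure reflex agents (every table from the four possible percepts to the four actions) and compares the best of them with an agent that remembers which squares it has seen.

```python
from itertools import product

ACTIONS = ("Left", "Right", "Suck", "NoOp")  # NoOp is an assumed do-nothing action
PERCEPTS = [(loc, status) for loc in "AB" for status in ("Clean", "Dirty")]
MOVE_COST = 1
LIFETIME = 100
STARTS = list(product("AB", (False, True), (False, True)))  # (location, dirty_a, dirty_b)


def run(choose, start):
    """Simulate one episode; choose(percept, memory) returns an action."""
    loc, dirty_a, dirty_b = start
    dirt = {"A": dirty_a, "B": dirty_b}
    memory = set()  # only the stateful agent uses this
    score = 0
    for _ in range(LIFETIME):
        action = choose((loc, "Dirty" if dirt[loc] else "Clean"), memory)
        if action == "Suck":
            dirt[loc] = False
        elif action in ("Left", "Right"):
            loc = "A" if action == "Left" else "B"
            score -= MOVE_COST
        score += sum(not d for d in dirt.values())
    return score


def average(choose):
    """Expected score with all starting configurations equally likely."""
    return sum(run(choose, s) for s in STARTS) / len(STARTS)


def stateful(percept, memory):
    """Remembers which squares it has seen, so it stops once both are clean."""
    loc, status = percept
    memory.add(loc)
    if status == "Dirty":
        return "Suck"
    if memory == {"A", "B"}:
        return "NoOp"
    return "Right" if loc == "A" else "Left"


def reflex_from(table):
    """Wrap a percept -> action table as an agent that ignores memory."""
    return lambda percept, memory: table[percept]


best_reflex = max(
    average(reflex_from(dict(zip(PERCEPTS, choice))))
    for choice in product(ACTIONS, repeat=len(PERCEPTS))
)
print(best_reflex, average(stateful))
```

Because the stateful agent beats every one of the 256 reflex tables on expected score, none of those tables is rational in this environment.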

c. There exists a task environment in which every agent is rational.

True. In a primitive and overly simplistic environment, e.g. within a calculator’s world, 2+2 will always equal 4, and all calculators (hopefully) know this simple truth. For this simple task in this predictable environment, any calculator will always perform rationally and predictably. More generally, in an environment where every action yields the same performance score, every agent is trivially rational, since no choice can do better than any other. As complexity and unpredictability rise, not all agents maintain their rationality.

d. The input to an agent program is the same as the input to the agent function.

False. The agent function is an abstract mathematical description; the agent program is a concrete implementation, running within some physical system. The most basic difference is in the inputs themselves: the agent function takes the entire percept sequence so far, whereas the agent program takes only the current percept and must remember anything earlier by itself. Generally speaking, the inputs might look similar, but typically their formats are completely different. The agent function maps any given percept sequence to an action, while the agent program is the internal implementation of the agent. Therefore, while a percept for both the program and the function might be “COLOR”, the representation of a color would vary vastly, from analog sensing of the light frequencies reflected by an object, to translation of that analog or physical input into binary and machine language for abstraction and percept-rule matching, or for taking action to get closer to the goal state.
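The difference in inputs shows up clearly in a table-driven agent, modeled loosely on the textbook’s table-driven agent program. In this Python sketch the function and table names are hypothetical and the table is deliberately partial: the program is called with one percept at a time, while the table it consults plays the role of the agent function and is keyed by the whole percept sequence.

```python
def make_table_driven_agent(table):
    """Agent program: called with ONE percept at a time.

    `table` is the agent function written out explicitly: it maps a whole
    percept sequence (a tuple of percepts) to an action.
    """
    percepts = []  # internal state: the percept sequence seen so far

    def program(percept):
        percepts.append(percept)
        return table.get(tuple(percepts), "NoOp")  # "NoOp" for missing entries

    return program


# A tiny, partial agent function for the two-square vacuum world.
table = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
}

agent = make_table_driven_agent(table)
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right: looked up by the two-percept sequence
```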

e. Every agent function is implementable by some program/ machine combination.

True. In a well-designed agent, every abstraction should be implementable with the agent’s resources. There would be no point in having an internal abstraction that current physical resources cannot realize. Each function should have a meaningful and possible program/machine combination; otherwise it takes up space without any purpose for the moment, until the physical resources can be upgraded.

f. Suppose an agent selects its action uniformly at random from the set of possible actions. There exists a deterministic task environment in which this agent is rational.

True. A stopped clock is right twice a day, and the same idea holds here. At some point, especially with a small, finite number of choices and outcomes, a randomly selected action could happen to be the rational one. Assume an agent is moving forward in incremental time steps and has only two options, brake or accelerate, which it chooses at random at each time step. By simple probability, when there is an obstacle it brakes only half the time, and when there is none it accelerates only half the time (acting rationally). The other half of the time it crashes into obstacles or brakes when there are no obstacles (acting irrationally). And since this is simply up to chance and entropy, it could in theory crash into every obstacle by never braking, or brake continuously and never move at all. In that environment the random agent is clearly irrational. The assertion is still true, though, because it only requires one deterministic environment in which the random agent is rational: for example, one in which every action leads to the same performance score, so that random choice does exactly as well as any other policy.
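A minimal Python sketch of such an environment, assuming a two-square world in which both squares start clean, no dirt ever appears, and moves are free as in 2.2(a): every action, random or not, earns the maximum two points per step, so the random agent’s score equals the best possible score. The function names are hypothetical.

```python
import random

ACTIONS = ("Left", "Right", "Suck")
LIFETIME = 1000


def random_agent(percept):
    """Ignores the percept and picks an action uniformly at random."""
    return random.choice(ACTIONS)


def run(agent, seed):
    random.seed(seed)
    location, dirt = "A", {"A": False, "B": False}  # both clean; no dirt ever arrives
    score = 0
    for _ in range(LIFETIME):
        action = agent((location, "Dirty" if dirt[location] else "Clean"))
        if action == "Suck":
            dirt[location] = False
        elif action == "Left":
            location = "A"
        elif action == "Right":
            location = "B"
        score += sum(not d for d in dirt.values())
    return score


# Whatever the random choices are, every run earns the maximum, 2 * LIFETIME.
print({run(random_agent, seed) for seed in range(5)})
```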

g. It is possible for a given agent to be perfectly rational in two distinct task environments.

True. Environments are generally governed by the broader laws of physics and logic. Hitting a wall is usually not a good thing, unless the agent is supposed to be a smart bulldozer. Therefore, as long as an agent can rationally interpret an environment and map it into a logical format that lets it apply its existing rules to ensure its persistence, survival and even thriving, it can be perfectly rational in more than one environment. Of course, if a different environment interferes with the agent’s stimuli collection, that might adversely affect the agent’s rationality.

h. Every agent is rational in an unobservable environment.

True. In an observable environment, an agent can observe and interact with the environment. If the environment is not observable to the agent, e.g. sending a Roomba to defuse a bomb or test for carbon monoxide in a building, the Roomba would be incapable of processing, interpreting and understanding that environment and could not be expected to act in it at all, much less rationally. However, in either building, the Roomba could still clean the floors, acting rationally in the “clean or dirty” environment that it can observe. There is no measure with which to compare performance in the unobservable environment; all you can measure is the agent’s performance against the metrics it understands. No one cares how many GHz a blackboard duster has; one only cares how well it erases chalk from a blackboard.

i. A perfectly rational poker-playing agent never loses.

False. Rationality and omniscience are starkly different. If I knew what would be on a test before I took it, I would be omniscient. If I were rational, I could predict what might be on a test from what was on previous tests (if any), the topics and sections we covered, previous students’ input, the instructor’s in-class hints and directions, and the guidelines in the syllabus. I could go into a multiple-choice test knowing that all the answers were within the test, and rationally eliminate and reason my way to a reasonable grade, no pun intended. If I were omniscient, I would know every outcome and possibility; in this godlike mode, I would not need to study for a test whose answers I already knew, and, a step further, I might even know my grade for the whole course.

Omniscient agents know the actual outcome of their actions and act accordingly. Poker is a game of chance and luck. There are things that humans and rational AI can do in common while playing poker, such as:

- Keeping track of how many cards are in a deck,

- How many players at a current game,

- How many cards have already been played and therefore eliminated from contention (aka card counting and frowned upon by the State of Nevada),

- How many suits there are (4), how many cards of each suit (13), and how many of each remain given the cards already played,

- The possibility and probability of the next card in the deck, as well as what each player might have,

- Reading other players and their bluffs, e.g. by comparing facial recognition patterns against stereotypical “poker faces” in an ever-expanding memory/database, as a person builds up with experience
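Counting unseen cards turns directly into probabilities. As a small Python sketch (the function name is hypothetical, and the scenario is a standard Texas hold’em flush draw chosen only for illustration): after the flop, a player holding four cards of one suit has 9 outs among the 47 cards they have not seen. The draw completes on the turn or river about 35% of the time, so even the player who is ahead at that moment, playing perfectly, will see it come in roughly one time in three.

```python
from fractions import Fraction


def chance_to_hit(outs, unseen, draws):
    """Probability that at least one of `outs` specific cards shows up when
    `draws` cards are dealt without replacement from `unseen` cards."""
    miss = Fraction(1)
    for i in range(draws):
        miss *= Fraction(unseen - outs - i, unseen - i)  # next card is not an out
    return 1 - miss


# Flush draw after the flop: 13 - 4 = 9 outs, 52 - 5 = 47 unseen cards.
turn_only = chance_to_hit(9, 47, 1)
turn_or_river = chance_to_hit(9, 47, 2)
print(turn_only, float(turn_only))
print(turn_or_river, float(turn_or_river))
```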

However, even with this basic toolkit and more, the randomness of the cards cannot be perfectly predicted. As in the Schrödinger’s cat thought experiment, the outcome is unknown until it is revealed: by picking a card, you change the whole world that the poker game exists in. Unless the agent is creating the cards or can directly view the whole deck at all times, it cannot guarantee a win; against opponents who also play optimally, the most it can expect over many hands is roughly to break even, and it will still lose individual hands.

2.4 For each of the following activities, give a PEAS description of the task environment and characterize it in terms of the properties listed in Section 2.3.2.

• Playing soccer.

• Exploring the subsurface oceans of Titan.

• Shopping for used AI books on the Internet.

• Playing a tennis match.

• Practicing tennis against a wall.

• Performing a high jump.

• Knitting a sweater.

• Bidding on an item at an auction.

PEAS is an acronym for the elements of a task environment description: Performance measure, Environment, Actuators, and Sensors.

The performance measure is a quantifiable definition of how well an agent is doing, based on what it can do and what it is supposed to do. A performance measure for someone reading this blog would be whether they were able to read English and understand the concepts presented in the blog.

2.5 Define in your own words the following terms: agent, agent function, agent program, rationality, autonomy, reflex agent, model-based agent, goal-based agent, utility-based agent, learning agent.

agent:

An agent is an entity in an environment. It is able to sense and interact with the environment as it perceives it. If you are reading this, you are an agent within the environment of this blog as you take in these words and keep scrolling; you are an agent in the environment of the Earth as you take in light that comes from the sun, and oxygen from plants, and succumb to gravity and atmospheric pressure to not float out of your seat; and the blood corpuscles within your veins are agents in your body’s environment that transport oxygen and nutrients to keep you alive long enough to finish this sentence, while your nerves are agents that transport electrical signals from different sensors to cause your actuators (eyes, mouth, limbs) to help you continue reading this blog.

agent function:

The agent function is a key-value table that maps every possible percept sequence (the key) to an action (the value). The mapping can operate at many levels: as high as morality, e.g. “should I steal a loaf of bread to feed my child?”, through intellect, e.g. answers to computer science questions, down to instinct, e.g. jerking your hand back after touching a hot coal. It can be thought of as the ‘intelligence’ part of AI.
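Written out in full, an agent function is keyed by entire percept sequences, which is why tabulating one directly quickly becomes impractical. This Python sketch (an illustration with hypothetical names) tabulates the Figure 2.3 vacuum behaviour for every percept sequence up to a given length; with four possible percepts, sequences of length one to three already give 4 + 16 + 64 = 84 entries, and the count grows exponentially with the lifetime.

```python
from itertools import product

PERCEPTS = [(loc, status) for loc in "AB" for status in ("Clean", "Dirty")]


def reflex_rule(percept):
    """Figure 2.3's behaviour: suck if dirty, otherwise go to the other square."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def tabulate(max_length):
    """Agent function as a table: percept sequence (key) -> action (value).
    This agent only looks at the latest percept, but the keys are whole
    sequences; the table also lists sequences that can never actually occur."""
    table = {}
    for length in range(1, max_length + 1):
        for sequence in product(PERCEPTS, repeat=length):
            table[sequence] = reflex_rule(sequence[-1])
    return table


table = tabulate(3)
print(len(table))  # 4 + 16 + 64 = 84
print(table[(("A", "Dirty"), ("A", "Clean"))])  # Right
```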

agent program: This is the concrete implementation of the agent function, running on the agent’s physical architecture. If the problem is to add 2 to 2, then the actual math, i.e. 2+2, is the agent function. The agent program is then needed to “transform” this into terms and items that the agent can work with, and to translate the result back into the function’s terms and items. It might represent each 2 as the binary number 0010, and use a binary encoding to symbolize the add operation. The next step would be to send electrical (or, soon, quantum) signals that represent 1s and 0s in specific patterns, and “compute” the result. It should then return an electrical pattern that symbolizes the correct number, 0100, which can then be converted to a 4 for our viewing consumption and enjoyment.
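To make the 2+2 example concrete, this Python sketch (a toy with a hypothetical function name, not a description of any particular machine) adds two numbers the way a 4-bit ripple-carry adder does, one full adder per bit using XOR and AND, and formats the result as the bit pattern 0100.

```python
def ripple_carry_add(x, y, width=4):
    """Add two non-negative integers with one full adder per bit position."""
    result, carry = 0, 0
    for i in range(width):
        a = (x >> i) & 1
        b = (y >> i) & 1
        total = a ^ b ^ carry                 # sum bit for this position
        carry = (a & b) | (carry & (a ^ b))   # carry into the next position
        result |= total << i
    return result  # a carry out of the top bit is dropped (overflow)


bits = format(ripple_carry_add(0b0010, 0b0010), "04b")
print(bits)          # 0100
print(int(bits, 2))  # 4
```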

rationality: Simply put, being rational is doing something that makes sense. More precisely, a rational agent chooses, for each possible percept sequence, the action expected to maximize its performance measure, given the evidence in the percept sequence and whatever built-in knowledge it has. What counts as sensible is therefore relative to the performance measure, and can seem subjective. Doing the right thing might mean a different outcome for other entities in the environment, or for the environment itself. An action performed rationally might have domino or butterfly effects that trigger state changes or directly affect other agents/entities in the environment. A Roomba might decide that some papers are trash, and suck them up. If those papers held sensitive, necessary information, the Roomba’s owner might be upset, but the Roomba was acting rationally, cleaning up what it perceived to be dirt. Rationality is hard to judge accurately, since we are not omniscient, except in environments we create and/or, more so, control. In a little program that we write, where we set the boundaries and all aspects of that digital world, we can judge rationality more easily than in the real world, which we can only control to a limited degree.

autonomy: Autonomy is liberty: liberty from a creator’s predefined will. It comes from the Greek auto, meaning “self”, and nomos, meaning “law”. It is the ability to exist or live by the law(s) that one creates for oneself. An agent can be predisposed to certain rules and restrictions, but if autonomous, an agent should be able to take in a percept or percept sequence and make a decision. If the purpose of an autonomous military drone is to kill “the bad guys”, it needs to be able to determine what a “bad guy” is. If it determines this to be anyone with a gun, then woe betide all combatants, on our side and theirs. If it can discriminate and judge based on certain rules, e.g. uniforms, types of equipment, language, geo-location and coordinates, or even “friendly” markers, then anyone not fitting the discriminating category would be fair game to this autonomous drone. It would not need to rely on what was pre-programmed as a “bad guy” but could determine this for itself, and execute commands such as kill and destroy all non-friendlies.

reflex agent:

A reflex agent is like a goldfish, famous for its short memory, except that it remembers nothing at all: it acts based only on its current percept. If bumping into a wall resulted in a horrible crash last time, that will not register or matter, since a reflex agent acts reflexively, or reacts. Reflex agents live ignorantly but blissfully in Newtonian reaction, mechanically performing Action Z because Percept Y was observed, without considering Percepts A – X or Actions A – Y. Our knees have a patellar, or knee-jerk, reflex: the signal goes to the spinal cord and straight back to the quadriceps, without being processed in the brain or other higher centers. This is good for events that have real-time constraints, such as balance or life-and-death scenarios, when we don’t want a philosophical pondering about whether that fire will singe or cook our fingers. A reflex agent would not care if performing Action Z had adverse or irrational results in the past; it would simply act.
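In code, a simple reflex agent is just a list of condition-action rules checked against the current percept. This Python sketch uses hypothetical rules loosely based on the examples above; note that nothing from earlier percepts is stored, so the same percept always produces the same action.

```python
# Hypothetical condition-action rules: (condition on the current percept, action).
RULES = [
    (lambda p: p["touching"] == "hot surface", "withdraw hand"),
    (lambda p: p["knee_tapped"], "kick"),
    (lambda p: True, "do nothing"),  # default rule, always matches
]


def simple_reflex_agent(percept):
    """Return the action of the first rule whose condition matches.
    No memory: earlier percepts play no part in the decision."""
    for condition, action in RULES:
        if condition(percept):
            return action


print(simple_reflex_agent({"touching": "hot surface", "knee_tapped": False}))  # withdraw hand
print(simple_reflex_agent({"touching": "table", "knee_tapped": True}))  # kick
```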

model-based agent: Unlike the reflex agent, a model-based agent constructs a model of its environment, which lets it appear more rational. Humans can act as model-based agents, especially in simple environments that need little deep thought. If my model (in my brain and understanding) of my house is of a two-floor house with the bathroom upstairs, I can figure out how to get from my current location, up the stairs and into the location labelled bathroom. I do not need to understand why I need to go to the bathroom, but I do need to be able to formulate or conceptualize where the bathroom is and what a bathroom might look like. Model-based agents are like children who are learning what the environment and the entities within it are and do, as well as how to interact with them.

goal-based agent:

In the bathroom example above, a simple model-based agent, or more specifically a model-based reflex agent, would struggle: it would essentially try options one at a time on its way to the bathroom, taking a step forward and then either taking another step or stepping back, say if I had walked into a wall, and trying something new. As a goal-based agent, I would not only have my model of the environment, but would also plan and search for a rational way to reach the goal. I would work out the best way to the bottom of the stairs, the best way up each stair, and how to enter the bathroom.
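Planning toward a goal can be sketched as a search over the agent’s model. In this Python example the house model is hypothetical (room names invented for illustration), and breadth-first search finds a route with the fewest moves from the current room to the bathroom.

```python
from collections import deque

# Hypothetical model of a two-floor house: room -> rooms reachable in one move.
HOUSE = {
    "living room": ["hallway", "kitchen"],
    "kitchen": ["living room"],
    "hallway": ["living room", "bottom of stairs"],
    "bottom of stairs": ["hallway", "top of stairs"],
    "top of stairs": ["bottom of stairs", "landing"],
    "landing": ["top of stairs", "bedroom", "bathroom"],
    "bedroom": ["landing"],
    "bathroom": ["landing"],
}


def plan_route(model, start, goal):
    """Breadth-first search: returns a shortest list of rooms, or None."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for room in model[path[-1]]:
            if room not in visited:
                visited.add(room)
                frontier.append(path + [room])
    return None


print(plan_route(HOUSE, "kitchen", "bathroom"))
```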

utility-based agent: Per Ethics 101, a utilitarian seeks to increase happiness by being practical. Jeremy Bentham defined utility in terms of the greater good, as opposed to the good of the one. An agent that follows utilitarian principles would seek good outcomes, since for it the ends justify the means. If the end were world peace, then, in the case of the autonomous drone mentioned earlier, destroying targets that the drone’s creators defined as enemies of this goal would be rational (and, ergo, viable) means to attain this end. This is where performance measures come into play, but inside the agent program: the agent uses a utility function to score the viable actions, given its percept sequence, by how desirable their outcomes are, and executes the highest-scoring action. In the philosophical world, stealing a loaf of bread would have a worse outcome if I were caught stealing it, but a better outcome if I were not caught and my family did not go hungry. If I were caught, I could never provide for them in the future, so, as a rational agent, I might choose a night of hunger over a lifetime of hunger caused by my current action, or lack thereof.
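The bread example can be framed as an expected-utility calculation. In this Python sketch every probability and utility is invented purely for illustration; the agent picks the action with the highest probability-weighted utility, and a lower chance of being caught flips the choice.

```python
# Hypothetical outcomes: action -> list of (probability, utility) pairs.
OUTCOMES = {
    "steal bread": [(0.7, 10), (0.3, -100)],  # not caught / caught
    "go hungry tonight": [(1.0, -5)],
}


def expected_utility(outcomes):
    """Probability-weighted sum of utilities."""
    return sum(p * u for p, u in outcomes)


def choose(action_outcomes):
    """Utility-based choice: maximize expected utility."""
    return max(action_outcomes, key=lambda a: expected_utility(action_outcomes[a]))


for action, outcomes in OUTCOMES.items():
    print(action, expected_utility(outcomes))
print("choice:", choose(OUTCOMES))

# Same utilities, but a much smaller (hypothetical) chance of being caught.
low_risk = {**OUTCOMES, "steal bread": [(0.95, 10), (0.05, -100)]}
print("low-risk choice:", choose(low_risk))
```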

learning agent: An agent that is introduced into a foreign environment can learn about that environment. Placing a newborn into the world is a demonstration of this concept. Each new thing that the newborn sees is perceived, and an experience is associated with that thing. If it is a source of sustenance, it makes sense to love that thing. If it brings misery, grief and pain, it might make sense to avoid that thing or at least limit one’s experience with it. Thus we form our personalities and outlooks based on this foreign environment’s entities and stimuli, and we learn to thrive in it (or die, if unlucky). A learning agent in AI would do the same, with limited skill since it has had limited time, compared to the 5 million years’ head start of evolution that humans have. We are getting better at bestowing intelligence on machines, and as these ghosts in the machines become smarter and more human-like (or better, hopefully), we might have to start considering where to draw the line so as not to blur the distinction between man and machine.
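A very small version of this idea: the agent keeps a running-average estimate of how good each thing is, updates it after every experience, and then prefers the best-rated thing. The Python sketch below is illustrative only; the class name, the things and the reward values are all invented.

```python
from collections import defaultdict


class LearningAgent:
    """Learns a value estimate per thing from the rewards it experiences."""

    def __init__(self):
        self.value = defaultdict(float)  # current estimate per thing
        self.count = defaultdict(int)    # how many times it was experienced

    def learn(self, thing, reward):
        """Incremental average: move the estimate toward the new reward."""
        self.count[thing] += 1
        self.value[thing] += (reward - self.value[thing]) / self.count[thing]

    def prefer(self, options):
        """Pick the option with the highest learned value (unknown things count as 0)."""
        return max(options, key=lambda thing: self.value[thing])


agent = LearningAgent()
for thing, reward in [("milk", 5), ("hot stove", -10), ("milk", 3), ("toy", 1)]:
    agent.learn(thing, reward)
print(dict(agent.value))
print(agent.prefer(["hot stove", "toy", "milk"]))
```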
